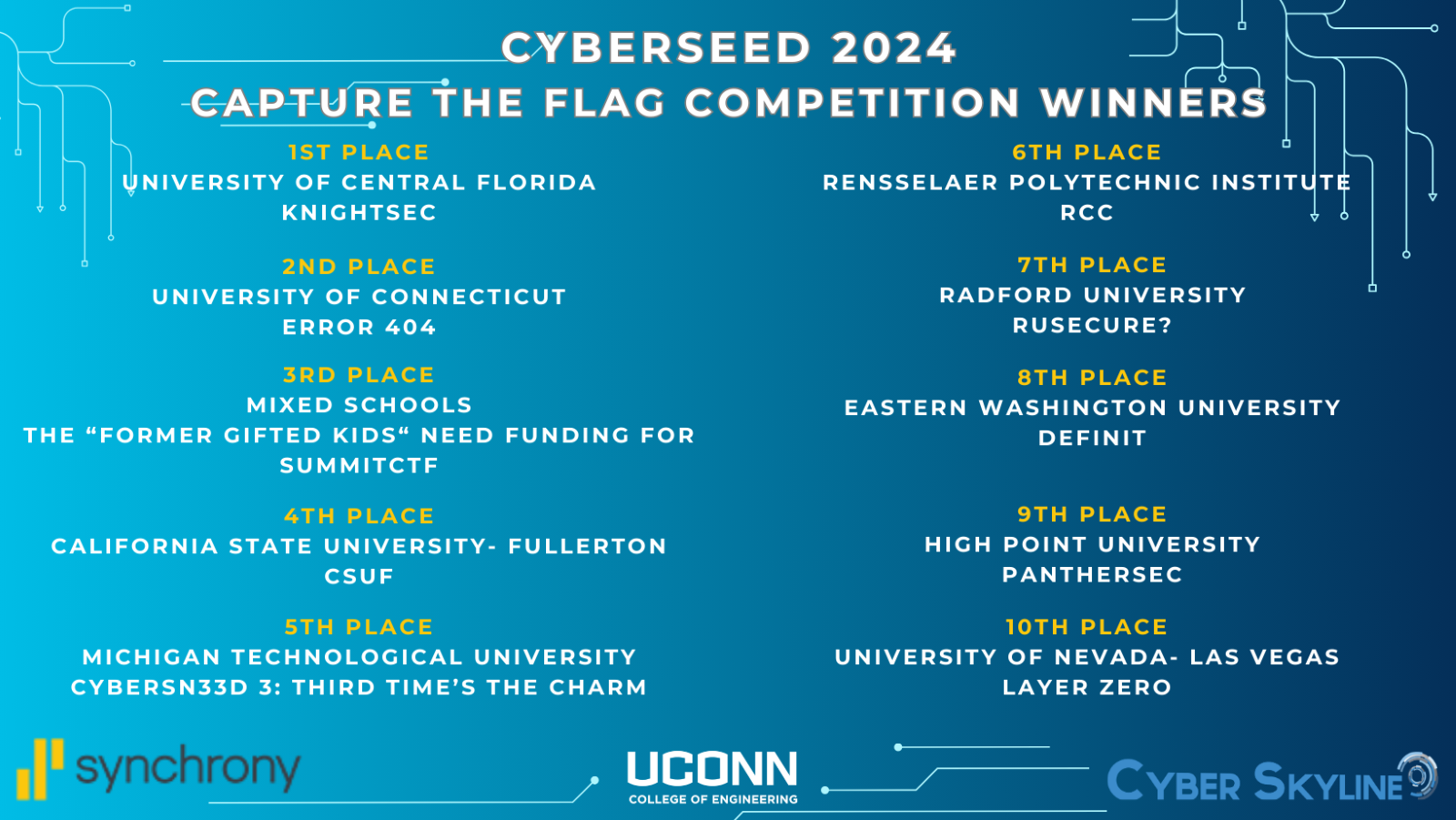

The Connecticut Advanced Computing Center in the University of Connecticut’s College of Engineering hosts a national cybersecurity competition, CyberSEED. Since 2014, this competition has allowed cybersecurity teams from around the country to compete in “Capture the Flag” cybersecurity challenges. Courtesy of UConn College of Engineering.

Center News

CyberSEED 2024

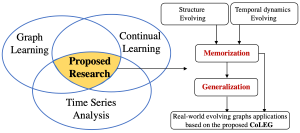

Congratulations Caiwen Ding and Dongjin Song on your NSF CAREER Awards!

CACC supported Tan Zhu’s NeurIPS travel.

Congratulations Bin Lei, Caiwen Ding, Le Chen, Pei-Hung Lin, and Chunhua Liao on having your paper accepted by the HPEC 2023 conference and receiving the Outstanding Student Paper Award.

CACC is delighted to support Shaoyi Huang’s NeurIPS travel. We aim to provide similar travel support for other CACC faculty students.

Amid increasing demand, CT colleges in arms race to add cybersecurity programs, faculty

With thousands of cybersecurity job openings around the state — and entry-level positions that can command a six-figure starting salary — training the next generation of security engineers is a key challenge for Connecticut.

Colleges around the state say the fast-changing curriculum, difficulty of retaining expert faculty, importance of linking closely to industry, and looming challenge of AI make cybersecurity one of the most dynamic fields in education right now.

Another challenge is the ever-widening circle of people who need to be trained in combating cyberattacks.

“It’s not going to be good enough for there to be 10% or 15% of computer scientists who fix everybody else’s problems,” said Benjamin Fuller, an associate professor in the computer science department at the University of Connecticut.

Read more at HartfordBusiness.com

Four From UConn Named Fellows By AAAS

The AAAS is the world’s largest general scientific society.

Four University of Connecticut faculty members have been elected by the American Association for the Advancement of Science (AAAS) to its newest class of fellows. The AAAS is the world’s largest general scientific society and publisher of the Science family of journals.

The four are:

* Bahram Javidi, a professor in the Department of Electrical and Computer Engineering in the School of Engineering

* James Magnuson, a professor in the Department of Psychological Sciences in the College of Liberal Arts and Sciences

* Wolfgang Peti, a professor in the Department of Molecular Biology and Biophysics at UConn School of Medicine

* Anthony Vella, a professor and chair of the Department of Immunology at UConn School of Medicine and the Senior Associate Dean for Research Planning and Coordination

A systemwide IT outage caused by a cyberattack continues to affect Eastern Connecticut Health Network and Waterbury HEALTH.
